Google Cloud Functions is Google's implementation of a relatively new concept called functions as a service (FaaS). The service lets you run your code with zero server management: you focus on your code and don't have to worry about hardware or software maintenance. I really like that you are billed only for your function's execution time, metered to the nearest 100 milliseconds, and that you pay nothing while your function is idle. Cloud Functions also scale up and down automatically in response to events.
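To make the billing model concrete, here is a small Python sketch of how per-invocation compute time adds up. It assumes each execution is rounded up to the next 100 ms increment, which is how Google described billing for first-generation Cloud Functions. The helper names are hypothetical, and the sketch ignores memory size, pricing tiers and free-tier quotas.

```python
import math

INCREMENT_MS = 100  # billing granularity described above (assumed round-up rule)


def billable_ms(duration_ms: float) -> int:
    """Round one execution's duration up to the next 100 ms increment."""
    if duration_ms <= 0:
        return 0
    return math.ceil(duration_ms / INCREMENT_MS) * INCREMENT_MS


def total_billable_ms(durations_ms) -> int:
    """Sum billable time over several invocations; idle time adds nothing."""
    return sum(billable_ms(d) for d in durations_ms)


if __name__ == "__main__":
    print(billable_ms(1))                    # 100
    print(billable_ms(250))                  # 300
    print(total_billable_ms([1, 250, 100]))  # 500
```

Under this assumption, a 1 ms execution costs as much compute time as a 100 ms one, which is why very short functions are cheap but not free.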

Before you start using Cloud Functions, make sure the Cloud Functions API is enabled in your GCP project. The following command enables it:

```shell
gcloud services enable cloudfunctions.googleapis.com \
  --project=<your-project-id>
```

Now let’s connect to your GCP Cloud Shell (make sure you read my previous post about setting up a connection to GCP from Visual Studio Code using an Ubuntu instance), then create a new directory and a file named main.py.

```shell
gcloud beta cloud-shell ssh
mkdir newdir
cd newdir
nano main.py
```

Copy and paste the following script into main.py, then save and exit. The script is taken from a Google post (https://cloud.google.com/functions/docs/calling/storage#functions-calling-storage-python) that discusses Cloud Storage triggers.

```python
def hello_gcs(event, context):
    """Background Cloud Function to be triggered by Cloud Storage.
       This generic function logs relevant data when a file is changed.

    Args:
        event (dict): The dictionary with data specific to this type of event.
                      The `data` field contains a description of the event in
                      the Cloud Storage `object` format described here:
                      https://cloud.google.com/storage/docs/json_api/v1/objects#resource
        context (google.cloud.functions.Context): Metadata of triggering event.
    Returns:
        None; the output is written to Stackdriver Logging
    """

    print('Event ID: {}'.format(context.event_id))
    print('Event type: {}'.format(context.event_type))
    print('Bucket: {}'.format(event['bucket']))
    print('File: {}'.format(event['name']))
    print('Metageneration: {}'.format(event['metageneration']))
    print('Created: {}'.format(event['timeCreated']))
    print('Updated: {}'.format(event['updated']))
```

Now we can deploy the function in main.py. We trigger it with the object finalize event (`google.storage.object.finalize`), which fires when a “write” of a Cloud Storage object is successfully finalized. In particular, creating a new object or overwriting an existing one triggers this event. Make sure you run the deploy command from the folder that contains your main.py file; otherwise you may get the following error: “(gcloud.functions.deploy) OperationError: code=3, message=Build failed: missing main.py and GOOGLE_FUNCTION_SOURCE not specified. Either create the function in main.py or specify GOOGLE_FUNCTION_SOURCE to point to the file that contains the function; Error ID“
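Because that error only comes back from the build after the source has been uploaded, a quick local check before deploying can save a round trip. Below is a minimal sketch; `check_function_source` is a hypothetical helper, not part of gcloud. It assumes gcloud's default behaviour of using the function name (here `hello_gcs`) as the entry point when `--entry-point` is not given.

```python
import ast
from pathlib import Path


def check_function_source(directory: str = ".", entry_point: str = "hello_gcs") -> Path:
    """Hypothetical pre-flight check to run before `gcloud functions deploy`.

    Confirms that `directory` contains a main.py defining a top-level
    function named `entry_point`; raises otherwise.
    """
    main_py = Path(directory) / "main.py"
    if not main_py.is_file():
        raise FileNotFoundError(
            f"{main_py.resolve()} not found: run the deploy command "
            "from the folder that contains main.py"
        )
    # Parse the file instead of importing it, so the check has no side effects.
    tree = ast.parse(main_py.read_text(), filename=str(main_py))
    top_level = {node.name for node in tree.body if isinstance(node, ast.FunctionDef)}
    if entry_point not in top_level:
        raise ValueError(f"main.py does not define a top-level function named {entry_point!r}")
    return main_py
```

Running `check_function_source()` from inside `newdir` should return the path to main.py. Running it from any other folder raises `FileNotFoundError`, the local equivalent of the build error.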

```shell
gcloud functions deploy hello_gcs \
  --runtime python38 \
  --trigger-resource YOUR_TRIGGER_BUCKET_NAME \
  --trigger-event google.storage.object.finalize
```

You might get the error “ERROR: (gcloud.functions.deploy) You do not currently have an active account selected.” If so, run `gcloud auth login` to access your GCP account. You will be given a link to copy and paste into your browser, where you log in to your GCP account. Next, copy the code the browser provides and paste it back into your bash session. You should then see the messages “You are now logged in as [your@email.com]” and “Your current project is [your-project]“.

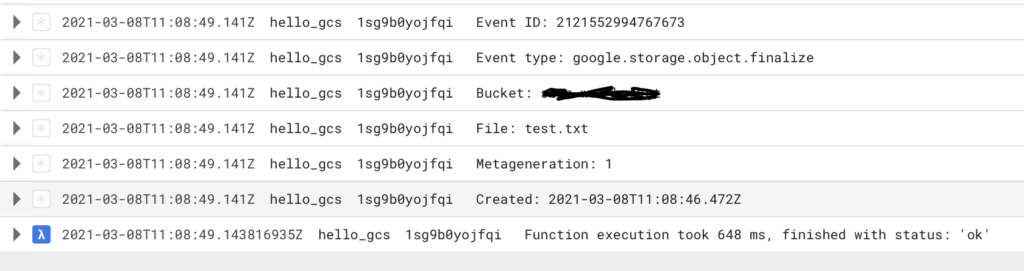
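If you redeploy often, it can help to script this step. The following is a minimal Python sketch with hypothetical helper names (it is not an official SDK). It assembles the same `gcloud functions deploy` arguments used in this post and runs them with `subprocess`, assuming gcloud is installed and already authenticated. `YOUR_TRIGGER_BUCKET_NAME` remains a placeholder.

```python
import shlex
import subprocess
from typing import List


def build_deploy_command(function_name: str, bucket: str,
                         runtime: str = "python38",
                         event: str = "google.storage.object.finalize") -> List[str]:
    """Return the argv for the `gcloud functions deploy` call used in this post."""
    return [
        "gcloud", "functions", "deploy", function_name,
        "--runtime", runtime,
        "--trigger-resource", bucket,
        "--trigger-event", event,
    ]


def deploy(function_name: str, bucket: str, source_dir: str = ".") -> None:
    """Run the deploy command from source_dir, which must contain main.py."""
    cmd = build_deploy_command(function_name, bucket)
    print("Running:", shlex.join(cmd))
    # check=True raises CalledProcessError if gcloud exits non-zero,
    # for example when no account is active and `gcloud auth login` is needed.
    subprocess.run(cmd, cwd=source_dir, check=True)
```

For example, `deploy("hello_gcs", "YOUR_TRIGGER_BUCKET_NAME", source_dir="newdir")`. The command is passed as an argument list rather than a shell string, so bucket names never need shell quoting, and `cwd` avoids the missing-main.py error.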

Now let’s test our function. Create an empty test.txt file in the directory where the sample code is located, then upload it to Cloud Storage to trigger the function.

```shell
nano test.txt
gsutil cp test.txt gs://YOUR_TRIGGER_BUCKET_NAME
```

Then check the function's logs (for example, with `gcloud functions logs read hello_gcs`) to make sure the execution completed.

Our function is working well: after we uploaded test.txt to the bucket, the logs show the information it printed.
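Uploading to a real bucket is the definitive test, but for quick iteration you can also call the function locally. Below is a minimal sketch that hand-builds an event dictionary and a stand-in context object. The field values are placeholders, not real trigger data. `SimpleNamespace` substitutes for `google.cloud.functions.Context`, and `make_finalize_event` and `run_locally` are hypothetical helper names.

```python
from datetime import datetime, timezone
from types import SimpleNamespace
from typing import Any, Callable, Dict, Tuple


def make_finalize_event(bucket: str, name: str) -> Tuple[Dict[str, str], SimpleNamespace]:
    """Build a fake (event, context) pair shaped like a Cloud Storage finalize event.

    Only the fields that hello_gcs reads are filled in, with placeholder values.
    """
    now = datetime.now(timezone.utc).isoformat().replace("+00:00", "Z")
    event = {
        "bucket": bucket,
        "name": name,
        "metageneration": "1",
        "timeCreated": now,
        "updated": now,
    }
    # Stand-in for google.cloud.functions.Context: it only needs the
    # attributes the function accesses.
    context = SimpleNamespace(
        event_id="local-test-1",
        event_type="google.storage.object.finalize",
    )
    return event, context


def run_locally(handler: Callable[[Dict[str, str], Any], None], bucket: str, name: str) -> None:
    """Invoke a background-function handler once with a fake finalize event."""
    event, context = make_finalize_event(bucket, name)
    handler(event, context)
```

From inside `newdir`, running `from main import hello_gcs` followed by `run_locally(hello_gcs, "YOUR_TRIGGER_BUCKET_NAME", "test.txt")` should print the same fields you saw in the logs, without deploying anything.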